Another important resource that limits scalability is memory. We, therefore, measured the memory consumed by entities and by queued events in each of the systems. Measuring the memory footprint of entities involves the allocation of $n$ empty entities and observing the size of the operating system process, for a wide range of $n$. In the case of Java, we invoke a garbage collection sweep and then request an internal memory count. Analogously, we queue a large number of events and observe their memory requirements. The entity and event memory results are plotted in Figure 17. The base memory footprint of each of the systems is less than 10 MB. Asymptotically, the process footprint increases linearly with the number of entities or events, as expected.
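The Java side of this measurement can be sketched roughly as follows. This is a minimal illustration and not the benchmark code used for the reported results: `EmptyEntity`, `EntityFootprint`, and the chosen entity counts are hypothetical names and values introduced here, and the sketch assumes that `System.gc()` followed by `Runtime.totalMemory() - Runtime.freeMemory()` is an acceptable stand-in for "a garbage collection sweep and an internal memory count". Because the footprint grows linearly, the marginal cost per entity is estimated as the slope between two measurements, which cancels any fixed base.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for an "empty entity"; this is not a JiST class. */
final class EmptyEntity { }

final class EntityFootprint {

    /** Heap bytes in use after requesting a collection (System.gc() is only a hint to the JVM). */
    static long usedHeapBytes() {
        Runtime rt = Runtime.getRuntime();
        System.gc();
        return rt.totalMemory() - rt.freeMemory();
    }

    /**
     * Allocates n empty entities, keeps them reachable, and returns the heap growth in bytes.
     * The result also includes the list's backing array (one reference per entity).
     */
    static long footprintOf(int n) {
        long before = usedHeapBytes();
        List<EmptyEntity> live = new ArrayList<>(n);
        for (int i = 0; i < n; i++) {
            live.add(new EmptyEntity());
        }
        long after = usedHeapBytes();
        // Use the list after measuring so the entities stay reachable during the measurement.
        if (live.size() != n) {
            throw new IllegalStateException("unexpected entity count");
        }
        return after - before;
    }

    /** Slope between two (count, bytes) measurements: marginal bytes per entity. */
    static double bytesPerEntity(int nSmall, long bytesSmall, int nLarge, long bytesLarge) {
        return (double) (bytesLarge - bytesSmall) / (nLarge - nSmall);
    }

    public static void main(String[] args) {
        int nSmall = 100_000;   // assumed sample points, chosen only for illustration
        int nLarge = 1_000_000;
        long small = footprintOf(nSmall);
        long large = footprintOf(nLarge);
        System.out.printf("approx. %.1f bytes per entity%n",
                bytesPerEntity(nSmall, small, nLarge, large));
    }
}
```

The printed figure varies with the JVM, its heap settings, and object header size, so it should be read as an order-of-magnitude check rather than a reproduction of the plotted results.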

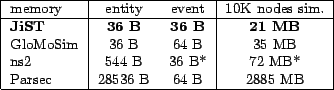

JiST performs well with respect to memory requirements for simulation entities. It performs comparably with GloMoSim, which uses node aggregation specifically to reduce Parsec's memory consumption. A GloMoSim "entity" is merely a heap-allocated object containing an aggregation identifier and an event-scheduling heap. In contrast, each Parsec entity contains its own program counter and logical process stack, the minimum stack size allowed by Parsec being 20 KB. In ns2, we allocate the smallest split object possible, an instance of TclObject, responsible for binding values across the C and Tcl memory spaces. JiST achieves the same dynamic configuration capability without requiring the memory overhead of split objects.

JiST also performs well with respect to event memory requirements. Though they store slightly different data, the C-based ns2 event objects are exactly the same size as JiST events. On the other hand, Tcl-based ns2 events require the allocation of a new split object per event, thus incurring the larger memory overhead above. Parsec events require twice the memory of JiST events.

The memory requirements per entity, $\mathit{mem}_{\mathit{entity}}$, and per event, $\mathit{mem}_{\mathit{event}}$, in each of the systems are tabulated in Figure 17. We also compute the memory footprint within each system for a simulation of 10,000 nodes, assuming approximately 10 entities per node and an average of 5 outstanding events per entity. In other words, we compute: $10^4 \times (10 \times \mathit{mem}_{\mathit{entity}} + 50 \times \mathit{mem}_{\mathit{event}})$. Note that these figures do not include the fixed memory base for the process, nor the actual simulation data. These are figures for empty entities and events alone, thus showing the overhead imposed by each system.
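The overhead computation for the 10,000-node scenario can be written out as a short sketch. The class name `OverheadEstimate` and the byte values passed in `main` are placeholders introduced here purely for illustration; they are not the measured per-entity or per-event sizes from Figure 17. Only the node count, the 10 entities per node, and the 5 outstanding events per entity come from the text.

```java
final class OverheadEstimate {

    // Scenario parameters stated in the text.
    static final long NODES = 10_000;
    static final long ENTITIES_PER_NODE = 10;
    static final long EVENTS_PER_ENTITY = 5;

    /**
     * Overhead in bytes for empty entities and events alone:
     * nodes * (entitiesPerNode * memEntity + entitiesPerNode * eventsPerEntity * memEvent).
     * Excludes the fixed process base and any simulation data.
     */
    static long overheadBytes(long memEntityBytes, long memEventBytes) {
        long entities = NODES * ENTITIES_PER_NODE;      // 100,000 entities
        long events = entities * EVENTS_PER_ENTITY;     // 500,000 outstanding events
        return entities * memEntityBytes + events * memEventBytes;
    }

    public static void main(String[] args) {
        // Placeholder sizes in bytes, NOT measured values from any of the systems.
        long bytes = overheadBytes(100, 40);
        System.out.printf("overhead: %.1f MB%n", bytes / 1e6);
    }
}
```

Because the scenario holds five times as many events as entities, the per-event size is weighted five times more heavily than the per-entity size. The entity term still dominates when entities are large: with a 20 KB minimum stack per entity, 100,000 entities would need roughly 2 GB for stacks alone.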

Note also that adding simulation data would doubly affect ns2, since it stores data in both the Tcl and C memory spaces. Moreover, Tcl encodes this data internally as strings. The exact memory impact thus varies from simulation to simulation. As a point of reference, regularly published ns2 results for a few hundred wireless nodes occupy more than 100 MB, and simulation researchers have scaled ns2 to around 1,500 non-wireless nodes using a process with a 2 GB memory footprint [19,16].